One of our key missions is to curate open data sources and provide the back to the world so that the brilliant thought leaders in the industry can use data more effectively and efficiently. This means taking the data in its native format (typically XML, txt, csv, Excel), loading it into our Oracle relational database, standardizing the data to important entities like person, place, organization, drug, disease, and then providing that data for download in a standard text file format.

Since we want other companies to be downloading and using our data, I figured I should go through the process myself and see how long it would take me to download and load some karmadata. I chose one of my favorite datasets, ClinicalTrials.gov, which is published by the National Institutes of Health. I chose ClinicalTrials.gov because it is published in XML with a fairly complex schema and there are plenty of free text fields that make standardization ultra-difficult and important.

We’ve attempted to make getting off the ground with karmadata as quick and easy as possible. Our Toolkit contains all the metadata that you should need, as well as the SQL scripts to load the data (currently complete for Oracle, but will be completed for SQL Server, MySQL, etc. in the near future). The hope was that someone could download the data, load it into a relational database, and answer a hard to answer question in less than a day.

I began by heading to karmadata.com and cruising the available files on the download page. Knowing that I wanted to load ClinicalTrials.gov, I clicked into the Source Files section to view the raw source data, and then to Fact Files sections to check out the standardized records that accompany it. I downloaded all of the available files.

Next I downloaded the files provided in the Toolkit section. I read the readme.doc to take me through the process. I found it to be extremely well written. Whoever authored it must be incredibly brilliant and good looking. I identified the scripts for creating the tables for fact and source data, as well as the external table scripts to load the data into those tables.

Then I got started. I created the tables for loading, unzipped the first period of data, and ran the inserts to load the data. Rather than programmatically unzipping and loading the data, I simply manually unzipped and ran the inserts as I went.

Ten minutes after I read the readme document, I had the entire ClinicalTrials.gov dataset loaded into a relational database, and best of all it was standardized to entities for sponsor organization, clinical sites, clinical investigators, geography, disease, drug, and time.

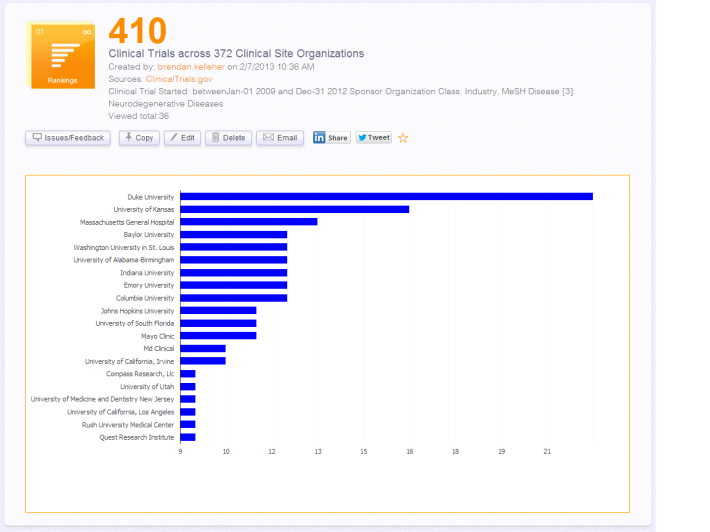

Now the fun part. The last thing that we provide in the toolkit is a couple of queries to get you started to play around with the data. In this case we ask the question, which are the leading sites in running industry sponsored, neurodegenerative disease trials, from 2009 to 2012? I run the query, and boom, I’m looking at a list that looks like a less attractive version of this data visualization.

You could download ClinicalTrials.gov from karmadata, or you could just create this data visualization on karmadata

Now just to recap what it would take to run that from scratch, you would need to go to ClinicalTrials.gov, download the entire dataset in XML, load the XML into a relational database, standardize the start dates to dates, standardize the many versions of each site name to a standard identifier, then group together all of the MeSH terms that fall under neurodegenerative diseases, and then run a query similar to the one we provided.

These are enormous barriers to entry to a functional, effective way of using the data. But what took us countless hours of development, can take you about 10 minutes. (Or you could just find or create a datacard on karmadata.com in about 10 seconds, but you get the point.)

Using our service was a little surreal for me. I was downloading data that I had downloaded, loaded, and standardized, and then was loading it back into an Oracle database. But it left me wishing that I could just use something like karmadata instead of dealing with all the pains that come with unstandardized data sets. Hopefully it will make you feel the same way.

This is one of many nice examples of curated/standardized feeds of CT.gov data. See also http://linkedct.org , http://www.patientslikeme.com/clinical_trials , http://www.clinicalcollections.org/trials/ etc.. Most of them do a good job in turning “strings to things” and a few of them apply the Linked Data principles http://en.wikipedia.org/wiki/Linked_data#Principles. However, I don’t think any of them use http-based URIs to identify things such as sponsor organization, clinical sites, clinical investigators, geography, disease, drug, and time.

I argue that we as a community caring for clinical trials data should push back to FDA and NLM to get a official standardized, linked data interface directly to the CT.gov at source. And yes, also for FDA and NLM to push back to pharma companies to provide standardized data about our trials with URIs to identify things instead of all these text strings.

So, given the whole clinical trial transparency movement I push hard for 5-star Linked Open Data http://5stardata.info/ both for the descriptive data about trials, and for the clinical trials data on summary level and on trials participant level. Hence my engagement in pushing CDISC in this direction and W3C HCLS engagement with the FDA, see http://semanticweb.com/clinical-studies-and-the-road-to-linked-data_b35252

We are totally on board with the mission of pushing back at the source. It is a very challenging endeavor as the data is highly un-standardized, and the publishers are slow or unresponsive to fix. We try to avoid bastardizing the data/making assumptions as much as possible, but ultimately it is impossible to rely on the publisher. In this same vein, we always standardize to industry accepted taxonomies where possible (ex MeSH, UN geography, etc.).

I don’t want to knock the NLM too much on this though. They do a terrific job with a lot of this data. The MeSH coding in PubMed and ClinicalTrials.gov goes above and beyond the call of duty, in my opinion. ClinicalTrials.gov has a lot of really cool functionality. But there are certainly many limitations, so we are just trying to reduce those limitations.

We love the work that the Lilly COI team is doing with Clinical Collections. Very neat stuff, and hard to believe that it originates from big pharma! I hadn’t realized that patientslikeme had incorporated CTGov data, but that’s a terrific application of the data. I hadn’t visited LinkedCT in a while, but that work is very impressive! The more people that engage with the data, and do cool things with it, the better it will be for the industry.

This is impressive. I agree the defiencies of clinicaltrials.gov are no one’s “fault” (we descibed some of these in http://www.citeulike.org/user/cdsouthan/article/10423875). It also looks as though there is a strong following wind to improve things. Still some way to go to tighten up a) what molecular mappings are available and, b) in the spirit of transparency, un-blinding those that aren’t (http://www.ncbi.nlm.nih.gov/pubmed/23159359)